The setup below was carefully tested against Mitaka Milestone 1, which should make it possible to verify the fix provided for

Bug #1365473 "Unable to create a router that's both HA and distributed". Delorean repos are now supposed to be rebuilt and ready for testing via RDO deployment within a week after each Mitaka Milestone [1].

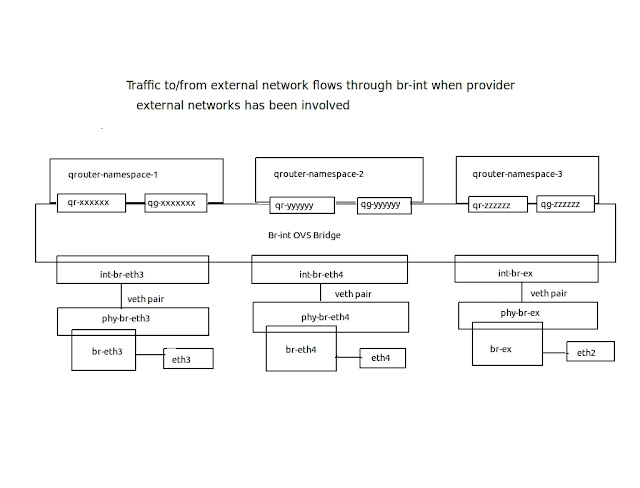

DVR provides direct, two-way access to the external network on Compute nodes. For instances with a floating IP address, routing between the project network and the external network is performed on the compute nodes. DVR thus eliminates the single point of failure and the network congestion on the Network Node for this traffic. agent_mode is set to "dvr" in l3_agent.ini on Compute Nodes. Instances with only a fixed IP address still rely on the single network node for outbound connectivity via SNAT. agent_mode is set to "dvr_snat" in l3_agent.ini on the Network Node. To support DVR, each compute node runs neutron-l3-agent, neutron-metadata-agent and neutron-openvswitch-agent. DVR also requires L2 population to be activated and ARP proxies running at the Neutron L2 layer.
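The split described above can be summarised in a short Python sketch. It is only an illustration of the rule, not Neutron code: the function names and the role labels "network" and "compute" are hypothetical, while the agent_mode values are the ones used in l3_agent.ini in this setup.

```python
# Illustrative model of the DVR traffic split; not part of Neutron.
# Role labels and function names are assumptions made for this sketch.

def agent_mode(node_role):
    """Return the l3_agent.ini agent_mode used for a node role in this setup."""
    modes = {"network": "dvr_snat", "compute": "dvr"}
    return modes[node_role]


def north_south_node(has_floating_ip):
    """Return the role of the node that routes an instance's external traffic."""
    if has_floating_ip:
        # Floating IP traffic is routed on the compute node hosting the instance.
        return "compute"
    # Fixed-IP-only instances go out via SNAT on the network node.
    return "network"


for fip in (True, False):
    role = north_south_node(fip)
    print(f"floating_ip={fip}: routed on {role} node, agent_mode={agent_mode(role)}")
```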

Setup

192.169.142.127 - Controller

192.169.142.147 - Network Node

192.169.142.137 - Compute Node

192.169.142.157 - Compute Node

*********************************************************************************

1. The first Libvirt subnet "openstackvms" serves as the management network.

All four VMs (every node) are attached to this subnet.

**********************************************************************************

2. The second Libvirt subnet "public" simulates the external network. The Network Node and the Compute nodes are attached to "public". Later on, the "eth2" interface (which belongs to "public") is supposed to be converted into an OVS port of the br-ex OVS bridge on the Network Node and on the Compute nodes.

***********************************************************************************

3. The third Libvirt subnet "vteps" simulates the VTEP endpoints. The Network and Compute Node VMs are attached to this subnet.

***********************************************************************************

# cat openstackvms.xml

<network>

<name>openstackvms</name>

<uuid>d0e9964a-f91a-40c0-b769-a609aee41bf2</uuid>

<forward mode='nat'>

<nat>

<port start='1024' end='65535'/>

</nat>

</forward>

<bridge name='virbr1' stp='on' delay='0' />

<mac address='52:54:00:60:f8:6d'/>

<ip address='192.169.142.1' netmask='255.255.255.0'>

<dhcp>

<range start='192.169.142.2' end='192.169.142.254' />

</dhcp>

</ip>

</network>

# cat public.xml

<network>

<name>public</name>

<uuid>d1e9965b-f92c-40c1-b749-b609aed42cf2</uuid>

<forward mode='nat'>

<nat>

<port start='1024' end='65535'/>

</nat>

</forward>

<bridge name='virbr2' stp='on' delay='0' />

<mac address='52:54:00:60:f8:6d'/>

<ip address='172.24.4.225' netmask='255.255.255.240'>

<dhcp>

<range start='172.24.4.226' end='172.24.4.238' />

</dhcp>

</ip>

</network>

# cat vteps.xml

<network>

<name>vteps</name>

<uuid>d0e9965b-f92c-40c1-b749-b609aed42cf2</uuid>

<forward mode='nat'>

<nat>

<port start='1024' end='65535'/>

</nat>

</forward>

<bridge name='virbr3' stp='on' delay='0' />

<mac address='52:54:00:60:f8:6d'/>

<ip address='10.0.0.1' netmask='255.255.255.0'>

<dhcp>

<range start='10.0.0.1' end='10.0.0.254' />

</dhcp>

</ip>

</network>
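As a cross-check of the "public" definition, the address and netmask from public.xml can be expanded with Python's standard ipaddress module. This is a small sketch added for illustration. It shows that the network is 172.24.4.224/28, which is the value used for CONFIG_PROVISION_DEMO_FLOATRANGE in the answer file, and that the broadcast address matches the BROADCAST value in the ifcfg-br-ex files.

```python
import ipaddress

# Address and netmask copied from public.xml.
public = ipaddress.ip_interface("172.24.4.225/255.255.255.240")
net = public.network
hosts = list(net.hosts())

print("network:  ", net)
print("broadcast:", net.broadcast_address)
print("hosts:    ", hosts[0], "-", hosts[-1], f"({len(hosts)} usable)")
```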

Four CentOS 7.1 VMs (4 GB RAM, 4 VCPUs each) have been built for testing on a Fedora 23 KVM hypervisor.

Controller node - one VNIC (eth0 for mgmt network )

Network node - three VNICs ( eth0 mgmt, eth1 vteps, eth2 public )

2xCompute node - three VNICs ( eth0 mgmt, eth1 vteps, eth2 public )

*************************************************

Installation answer-file : answer4Node.txt

*************************************************

[general]

CONFIG_SSH_KEY=/root/.ssh/id_rsa.pub

CONFIG_DEFAULT_PASSWORD=

CONFIG_MARIADB_INSTALL=y

CONFIG_GLANCE_INSTALL=y

CONFIG_CINDER_INSTALL=y

CONFIG_NOVA_INSTALL=y

CONFIG_NEUTRON_INSTALL=y

CONFIG_HORIZON_INSTALL=y

CONFIG_SWIFT_INSTALL=y

CONFIG_CEILOMETER_INSTALL=y

CONFIG_HEAT_INSTALL=n

CONFIG_CLIENT_INSTALL=y

CONFIG_NTP_SERVERS=

CONFIG_NAGIOS_INSTALL=y

EXCLUDE_SERVERS=

CONFIG_DEBUG_MODE=n

CONFIG_CONTROLLER_HOST=192.169.142.127

CONFIG_COMPUTE_HOSTS=192.169.142.137,192.169.142.157

CONFIG_NETWORK_HOSTS=192.169.142.147

CONFIG_VMWARE_BACKEND=n

CONFIG_UNSUPPORTED=n

CONFIG_VCENTER_HOST=

CONFIG_VCENTER_USER=

CONFIG_VCENTER_PASSWORD=

CONFIG_VCENTER_CLUSTER_NAME=

CONFIG_STORAGE_HOST=192.169.142.127

CONFIG_USE_EPEL=y

CONFIG_REPO=

CONFIG_RH_USER=

CONFIG_SATELLITE_URL=

CONFIG_RH_PW=

CONFIG_RH_OPTIONAL=y

CONFIG_RH_PROXY=

CONFIG_RH_PROXY_PORT=

CONFIG_RH_PROXY_USER=

CONFIG_RH_PROXY_PW=

CONFIG_SATELLITE_USER=

CONFIG_SATELLITE_PW=

CONFIG_SATELLITE_AKEY=

CONFIG_SATELLITE_CACERT=

CONFIG_SATELLITE_PROFILE=

CONFIG_SATELLITE_FLAGS=

CONFIG_SATELLITE_PROXY=

CONFIG_SATELLITE_PROXY_USER=

CONFIG_SATELLITE_PROXY_PW=

CONFIG_AMQP_BACKEND=rabbitmq

CONFIG_AMQP_HOST=192.169.142.127

CONFIG_AMQP_ENABLE_SSL=n

CONFIG_AMQP_ENABLE_AUTH=n

CONFIG_AMQP_NSS_CERTDB_PW=PW_PLACEHOLDER

CONFIG_AMQP_SSL_PORT=5671

CONFIG_AMQP_SSL_CERT_FILE=/etc/pki/tls/certs/amqp_selfcert.pem

CONFIG_AMQP_SSL_KEY_FILE=/etc/pki/tls/private/amqp_selfkey.pem

CONFIG_AMQP_SSL_SELF_SIGNED=y

CONFIG_AMQP_AUTH_USER=amqp_user

CONFIG_AMQP_AUTH_PASSWORD=PW_PLACEHOLDER

CONFIG_MARIADB_HOST=192.169.142.127

CONFIG_MARIADB_USER=root

CONFIG_MARIADB_PW=7207ae344ed04957

CONFIG_KEYSTONE_DB_PW=abcae16b785245c3

CONFIG_KEYSTONE_REGION=RegionOne

CONFIG_KEYSTONE_ADMIN_TOKEN=3ad2de159f9649afb0c342ba57e637d9

CONFIG_KEYSTONE_ADMIN_PW=7049f834927e4468

CONFIG_KEYSTONE_DEMO_PW=bf737b785cfa4398

CONFIG_KEYSTONE_TOKEN_FORMAT=UUID

CONFIG_KEYSTONE_SERVICE_NAME=httpd

CONFIG_GLANCE_DB_PW=41264fc52ffd4fe8

CONFIG_GLANCE_KS_PW=f6a9398960534797

CONFIG_GLANCE_BACKEND=file

CONFIG_CINDER_DB_PW=5ac08c6d09ba4b69

CONFIG_CINDER_KS_PW=c8cb1ecb8c2b4f6f

CONFIG_CINDER_BACKEND=lvm

CONFIG_CINDER_VOLUMES_CREATE=y

CONFIG_CINDER_VOLUMES_SIZE=5G

CONFIG_CINDER_GLUSTER_MOUNTS=

CONFIG_CINDER_NFS_MOUNTS=

CONFIG_CINDER_NETAPP_LOGIN=

CONFIG_CINDER_NETAPP_PASSWORD=

CONFIG_CINDER_NETAPP_HOSTNAME=

CONFIG_CINDER_NETAPP_SERVER_PORT=80

CONFIG_CINDER_NETAPP_STORAGE_FAMILY=ontap_cluster

CONFIG_CINDER_NETAPP_TRANSPORT_TYPE=http

CONFIG_CINDER_NETAPP_STORAGE_PROTOCOL=nfs

CONFIG_CINDER_NETAPP_SIZE_MULTIPLIER=1.0

CONFIG_CINDER_NETAPP_EXPIRY_THRES_MINUTES=720

CONFIG_CINDER_NETAPP_THRES_AVL_SIZE_PERC_START=20

CONFIG_CINDER_NETAPP_THRES_AVL_SIZE_PERC_STOP=60

CONFIG_CINDER_NETAPP_NFS_SHARES_CONFIG=

CONFIG_CINDER_NETAPP_VOLUME_LIST=

CONFIG_CINDER_NETAPP_VFILER=

CONFIG_CINDER_NETAPP_VSERVER=

CONFIG_CINDER_NETAPP_CONTROLLER_IPS=

CONFIG_CINDER_NETAPP_SA_PASSWORD=

CONFIG_CINDER_NETAPP_WEBSERVICE_PATH=/devmgr/v2

CONFIG_CINDER_NETAPP_STORAGE_POOLS=

CONFIG_NOVA_DB_PW=1e1b5aeeeaf342a8

CONFIG_NOVA_KS_PW=d9583177a2444f06

CONFIG_NOVA_SCHED_CPU_ALLOC_RATIO=16.0

CONFIG_NOVA_SCHED_RAM_ALLOC_RATIO=1.5

CONFIG_NOVA_COMPUTE_MIGRATE_PROTOCOL=tcp

CONFIG_NOVA_COMPUTE_PRIVIF=eth1

CONFIG_NOVA_NETWORK_MANAGER=nova.network.manager.FlatDHCPManager

CONFIG_NOVA_NETWORK_PUBIF=eth0

CONFIG_NOVA_NETWORK_PRIVIF=eth1

CONFIG_NOVA_NETWORK_FIXEDRANGE=192.168.32.0/22

CONFIG_NOVA_NETWORK_FLOATRANGE=10.3.4.0/22

CONFIG_NOVA_NETWORK_DEFAULTFLOATINGPOOL=nova

CONFIG_NOVA_NETWORK_AUTOASSIGNFLOATINGIP=n

CONFIG_NOVA_NETWORK_VLAN_START=100

CONFIG_NOVA_NETWORK_NUMBER=1

CONFIG_NOVA_NETWORK_SIZE=255

CONFIG_NEUTRON_KS_PW=808e36e154bd4cee

CONFIG_NEUTRON_DB_PW=0e2b927a21b44737

CONFIG_NEUTRON_L3_EXT_BRIDGE=br-ex

CONFIG_NEUTRON_L2_PLUGIN=ml2

CONFIG_NEUTRON_METADATA_PW=a965cd23ed2f4502

CONFIG_LBAAS_INSTALL=n

CONFIG_NEUTRON_METERING_AGENT_INSTALL=n

CONFIG_NEUTRON_FWAAS=n

CONFIG_NEUTRON_ML2_TYPE_DRIVERS=vxlan

CONFIG_NEUTRON_ML2_TENANT_NETWORK_TYPES=vxlan

CONFIG_NEUTRON_ML2_MECHANISM_DRIVERS=openvswitch

CONFIG_NEUTRON_ML2_FLAT_NETWORKS=*

CONFIG_NEUTRON_ML2_VLAN_RANGES=

CONFIG_NEUTRON_ML2_TUNNEL_ID_RANGES=1001:2000

CONFIG_NEUTRON_ML2_VXLAN_GROUP=239.1.1.2

CONFIG_NEUTRON_ML2_VNI_RANGES=1001:2000

CONFIG_NEUTRON_L2_AGENT=openvswitch

CONFIG_NEUTRON_LB_TENANT_NETWORK_TYPE=local

CONFIG_NEUTRON_LB_VLAN_RANGES=

CONFIG_NEUTRON_LB_INTERFACE_MAPPINGS=

CONFIG_NEUTRON_OVS_TENANT_NETWORK_TYPE=vxlan

CONFIG_NEUTRON_OVS_VLAN_RANGES=

CONFIG_NEUTRON_OVS_BRIDGE_MAPPINGS=physnet1:br-ex

CONFIG_NEUTRON_OVS_BRIDGE_IFACES=

CONFIG_NEUTRON_OVS_TUNNEL_RANGES=1001:2000

CONFIG_NEUTRON_OVS_TUNNEL_IF=eth1

CONFIG_NEUTRON_OVS_VXLAN_UDP_PORT=4789

CONFIG_HORIZON_SSL=n

CONFIG_SSL_CERT=

CONFIG_SSL_KEY=

CONFIG_SSL_CACHAIN=

CONFIG_SWIFT_KS_PW=8f75bfd461234c30

CONFIG_SWIFT_STORAGES=

CONFIG_SWIFT_STORAGE_ZONES=1

CONFIG_SWIFT_STORAGE_REPLICAS=1

CONFIG_SWIFT_STORAGE_FSTYPE=ext4

CONFIG_SWIFT_HASH=a60aacbedde7429a

CONFIG_SWIFT_STORAGE_SIZE=2G

CONFIG_PROVISION_DEMO=y

CONFIG_PROVISION_TEMPEST=n

CONFIG_PROVISION_TEMPEST_USER=

CONFIG_PROVISION_TEMPEST_USER_PW=44faa4ebc3da4459

CONFIG_PROVISION_DEMO_FLOATRANGE=172.24.4.224/28

CONFIG_PROVISION_TEMPEST_REPO_URI=https://github.com/openstack/tempest.git

CONFIG_PROVISION_TEMPEST_REPO_REVISION=master

CONFIG_PROVISION_ALL_IN_ONE_OVS_BRIDGE=n

CONFIG_HEAT_DB_PW=PW_PLACEHOLDER

CONFIG_HEAT_AUTH_ENC_KEY=fc3fb7fee61e46b0

CONFIG_HEAT_KS_PW=PW_PLACEHOLDER

CONFIG_HEAT_CLOUDWATCH_INSTALL=n

CONFIG_HEAT_USING_TRUSTS=y

CONFIG_HEAT_CFN_INSTALL=n

CONFIG_HEAT_DOMAIN=heat

CONFIG_HEAT_DOMAIN_ADMIN=heat_admin

CONFIG_HEAT_DOMAIN_PASSWORD=PW_PLACEHOLDER

CONFIG_CEILOMETER_SECRET=19ae0e7430174349

CONFIG_CEILOMETER_KS_PW=337b08d4b3a44753

CONFIG_MONGODB_HOST=192.169.142.127

CONFIG_NAGIOS_PW=02f168ee8edd44e4
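Because the answer file is plain INI, the node roles it assigns can be read back with Python's configparser before running packstack. The sketch below is an illustration only: it embeds a few keys from the file above instead of reading answer4Node.txt, and the helper names load_answers and node_roles are hypothetical.

```python
import configparser

# A few keys copied from answer4Node.txt; the real file has many more.
SAMPLE = """\
[general]
CONFIG_CONTROLLER_HOST=192.169.142.127
CONFIG_COMPUTE_HOSTS=192.169.142.137,192.169.142.157
CONFIG_NETWORK_HOSTS=192.169.142.147
CONFIG_NEUTRON_ML2_TYPE_DRIVERS=vxlan
CONFIG_NEUTRON_OVS_TUNNEL_IF=eth1
"""


def load_answers(text):
    """Parse answer-file text into a plain dict of CONFIG_* keys."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep the CONFIG_* keys upper-case
    parser.read_string(text)
    return dict(parser["general"])


def node_roles(answers):
    """Map each host IP to the role the answer file gives it."""
    roles = {answers["CONFIG_CONTROLLER_HOST"]: "controller"}
    for host in answers["CONFIG_NETWORK_HOSTS"].split(","):
        roles[host] = "network"
    for host in answers["CONFIG_COMPUTE_HOSTS"].split(","):
        roles[host] = "compute"
    return roles


answers = load_answers(SAMPLE)
for host, role in sorted(node_roles(answers).items()):
    print(host, role)
```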

**************************************

At this point, run on the Controller:

**************************************

# yum -y install centos-release-openstack-liberty

# yum -y install openstack-packstack

# packstack --answer-file=./answer4Node.txt

***************************************************************************

After the packstack install, perform the following on the Network and Compute Nodes

***************************************************************************

[root@ip-192-169-142-147 network-scripts]# cat ifcfg-br-ex

DEVICE="br-ex"

BOOTPROTO="static"

IPADDR="172.24.4.230"

NETMASK="255.255.255.240"

DNS1="83.221.202.254"

BROADCAST="172.24.4.239"

GATEWAY="172.24.4.225"

NM_CONTROLLED="no"

TYPE="OVSIntPort"

OVS_BRIDGE=br-ex

DEVICETYPE="ovs"

DEFROUTE="yes"

IPV4_FAILURE_FATAL="yes"

IPV6INIT=no

[root@ip-192-169-142-147 network-scripts]# cat ifcfg-eth2

DEVICE="eth2"

# HWADDR=00:22:15:63:E4:E2

ONBOOT="yes"

TYPE="OVSPort"

DEVICETYPE="ovs"

OVS_BRIDGE=br-ex

NM_CONTROLLED=no

IPV6INIT=no
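The ifcfg-br-ex files on the Network Node and the two Compute Nodes differ only in IPADDR (172.24.4.230, 172.24.4.229 and 172.24.4.238). The following Python sketch renders the file from a template and derives NETMASK and BROADCAST from the "public" prefix. It is an illustrative helper, not part of packstack: the function name render_ifcfg_br_ex and its defaults are assumptions taken from the values shown in this post.

```python
import ipaddress

TEMPLATE = """\
DEVICE="br-ex"
BOOTPROTO="static"
IPADDR="{ip}"
NETMASK="{netmask}"
DNS1="{dns}"
BROADCAST="{broadcast}"
GATEWAY="{gateway}"
NM_CONTROLLED="no"
TYPE="OVSIntPort"
OVS_BRIDGE=br-ex
DEVICETYPE="ovs"
DEFROUTE="yes"
IPV4_FAILURE_FATAL="yes"
IPV6INIT=no
"""


def render_ifcfg_br_ex(ip, prefix="172.24.4.224/28",
                       gateway="172.24.4.225", dns="83.221.202.254"):
    """Return ifcfg-br-ex content for one node on the "public" network."""
    net = ipaddress.ip_network(prefix)
    if ipaddress.ip_address(ip) not in net:
        raise ValueError(f"{ip} is outside {net}")
    return TEMPLATE.format(ip=ip, netmask=str(net.netmask),
                           broadcast=str(net.broadcast_address),
                           gateway=gateway, dns=dns)


print(render_ifcfg_br_ex("172.24.4.229"))
```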

*********************************

Switch to network service

*********************************

# chkconfig network on

# systemctl stop NetworkManager

# systemctl disable NetworkManager

# reboot

[root@ip-192-169-142-137 network-scripts]# cat ifcfg-br-ex

DEVICE="br-ex"

BOOTPROTO="static"

IPADDR="172.24.4.229"

NETMASK="255.255.255.240"

DNS1="83.221.202.254"

BROADCAST="172.24.4.239"

GATEWAY="172.24.4.225"

NM_CONTROLLED="no"

TYPE="OVSIntPort"

OVS_BRIDGE=br-ex

DEVICETYPE="ovs"

DEFROUTE="yes"

IPV4_FAILURE_FATAL="yes"

IPV6INIT=no

[root@ip-192-169-142-137 network-scripts]# cat ifcfg-eth2

DEVICE="eth2"

# HWADDR=00:22:15:63:E4:E2

ONBOOT="yes"

TYPE="OVSPort"

DEVICETYPE="ovs"

OVS_BRIDGE=br-ex

NM_CONTROLLED=no

IPV6INIT=no

*********************************

Switch to network service

*********************************

# chkconfig network on

# systemctl stop NetworkManager

# systemctl disable NetworkManager

# reboot

[root@ip-192-169-142-157 network-scripts]# cat ifcfg-br-ex

DEVICE="br-ex"

BOOTPROTO="static"

IPADDR="172.24.4.238"

NETMASK="255.255.255.240"

DNS1="83.221.202.254"

BROADCAST="172.24.4.239"

GATEWAY="172.24.4.225"

NM_CONTROLLED="no"

TYPE="OVSIntPort"

OVS_BRIDGE=br-ex

DEVICETYPE="ovs"

DEFROUTE="yes"

IPV4_FAILURE_FATAL="yes"

IPV6INIT=no

[root@ip-192-169-142-157 network-scripts]# cat ifcfg-eth2

DEVICE="eth2"

# HWADDR=00:22:15:63:E4:E2

ONBOOT="yes"

TYPE="OVSPort"

DEVICETYPE="ovs"

OVS_BRIDGE=br-ex

NM_CONTROLLED=no

IPV6INIT=no

*********************************

Switch to network service

*********************************

# chkconfig network on

# systemctl stop NetworkManager

# systemctl disable NetworkManager

# reboot

******************

Network Node

******************

[root@ip-192-169-142-147 ~(keystone_admin)]# ip netns

snat-00223343-b771-4b7a-bbc1-10c5fe924a12

qrouter-00223343-b771-4b7a-bbc1-10c5fe924a12

qdhcp-3371ea3f-35f5-418c-8d07-82a2a54b5c1d

[root@ip-192-169-142-147 ~(keystone_admin)]# ip netns exec snat-00223343-b771-4b7a-bbc1-10c5fe924a12 ip a |grep "inet "

inet 127.0.0.1/8 scope host lo

inet 70.0.0.13/24 brd 70.0.0.255 scope global sg-67571326-46

inet 172.24.4.236/28 brd 172.24.4.239 scope global qg-57d45794-46

[root@ip-192-169-142-147 ~(keystone_admin)]# ip netns exec snat-00223343-b771-4b7a-bbc1-10c5fe924a12 iptables-save | grep SNAT

-A neutron-l3-agent-snat -o qg-57d45794-46 -j SNAT --to-source 172.24.4.236

-A neutron-l3-agent-snat -m mark ! --mark 0x2/0xffff -m conntrack --ctstate DNAT -j SNAT --to-source 172.24.4.236

[root@ip-192-169-142-147 ~(keystone_admin)]# ip netns exec qrouter-00223343-b771-4b7a-bbc1-10c5fe924a12 ip a |grep "inet "

inet 127.0.0.1/8 scope host lo

inet 70.0.0.1/24 brd 70.0.0.255 scope global qr-bdd297b1-05

[root@ip-192-169-142-147 ~(keystone_admin)]# ip netns exec qrouter-00223343-b771-4b7a-bbc1-10c5fe924a12 ip rule ls

0: from all lookup local

32766: from all lookup main

32767: from all lookup default

1174405121: from 70.0.0.1/24 lookup 1174405121

[root@ip-192-169-142-147 ~(keystone_admin)]# ip netns exec qrouter-00223343-b771-4b7a-bbc1-10c5fe924a12 ip route show table all

default via 70.0.0.13 dev qr-bdd297b1-05 table 1174405121

70.0.0.0/24 dev qr-bdd297b1-05 proto kernel scope link src 70.0.0.1

broadcast 70.0.0.0 dev qr-bdd297b1-05 table local proto kernel scope link src 70.0.0.1

local 70.0.0.1 dev qr-bdd297b1-05 table local proto kernel scope host src 70.0.0.1

broadcast 70.0.0.255 dev qr-bdd297b1-05 table local proto kernel scope link src 70.0.0.1
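The value 1174405121, which appears both as the rule priority and as the routing table number, looks arbitrary, but it equals the 32-bit integer form of the gateway address 70.0.0.1. The Python sketch below only demonstrates that arithmetic. That Neutron derives the number this way is an inference from the output above, not something this setup states.

```python
import ipaddress

table_id = 1174405121  # taken from the "ip rule ls" output above

# Interpret the table number as a 32-bit IPv4 address, and the reverse.
as_address = ipaddress.IPv4Address(table_id)
as_integer = int(ipaddress.IPv4Address("70.0.0.1"))

print(as_address)
print(as_integer)
print(hex(table_id))
```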

[root@ip-192-169-142-147 ~(keystone_admin)]# ip netns exec qrouter-00223343-b771-4b7a-bbc1-10c5fe924a12 ifconfig

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 0 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

qr-bdd297b1-05: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 70.0.0.1 netmask 255.255.255.0 broadcast 70.0.0.255

inet6 fe80::f816:3eff:fedf:c80b prefixlen 64 scopeid 0x20<link>

ether fa:16:3e:df:c8:0b txqueuelen 0 (Ethernet)

RX packets 19 bytes 1530 (1.4 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 10 bytes 864 (864.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@ip-192-169-142-147 ~(keystone_admin)]# ip netns exec snat-00223343-b771-4b7a-bbc1-10c5fe924a12 ifconfig

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 0 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

qg-57d45794-46: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.24.4.236 netmask 255.255.255.240 broadcast 172.24.4.239

inet6 fe80::f816:3eff:fec7:1583 prefixlen 64 scopeid 0x20<link>

ether fa:16:3e:c7:15:83 txqueuelen 0 (Ethernet)

RX packets 25 bytes 1698 (1.6 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 13 bytes 1074 (1.0 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

sg-67571326-46: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 70.0.0.13 netmask 255.255.255.0 broadcast 70.0.0.255

inet6 fe80::f816:3eff:fed1:69b4 prefixlen 64 scopeid 0x20<link>

ether fa:16:3e:d1:69:b4 txqueuelen 0 (Ethernet)

RX packets 11 bytes 914 (914.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 14 bytes 1140 (1.1 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Neutron agents running on Network Node

******************************************************************************

neutron.conf should be the same on Controller and Network nodes

******************************************************************************

[root@ip-192-169-142-147 neutron(keystone_admin)]# cat neutron.conf | grep -v ^#|grep -v ^$

[DEFAULT]

verbose = True

router_distributed = True

debug = False

state_path = /var/lib/neutron

use_syslog = False

use_stderr = True

log_dir =/var/log/neutron

bind_host = 0.0.0.0

bind_port = 9696

core_plugin =neutron.plugins.ml2.plugin.Ml2Plugin

service_plugins =router

auth_strategy = keystone

base_mac = fa:16:3e:00:00:00

dvr_base_mac = fa:16:3f:00:00:00

mac_generation_retries = 16

dhcp_lease_duration = 86400

dhcp_agent_notification = True

allow_bulk = True

allow_pagination = False

allow_sorting = False

allow_overlapping_ips = True

advertise_mtu = False

dhcp_agents_per_network = 1

use_ssl = False

rpc_response_timeout=60

rpc_backend=rabbit

control_exchange=neutron

lock_path=/var/lib/neutron/lock

[matchmaker_redis]

[matchmaker_ring]

[quotas]

[agent]

root_helper = sudo neutron-rootwrap /etc/neutron/rootwrap.conf

report_interval = 30

[keystone_authtoken]

auth_uri = http://192.169.142.127:5000/v2.0

identity_uri = http://192.169.142.127:35357

admin_tenant_name = services

admin_user = neutron

admin_password = 808e36e154bd4cee

[database]

[nova]

[oslo_concurrency]

[oslo_policy]

[oslo_messaging_amqp]

[oslo_messaging_qpid]

[oslo_messaging_rabbit]

kombu_reconnect_delay = 1.0

rabbit_host = 192.169.142.127

rabbit_port = 5672

rabbit_hosts = 192.169.142.127:5672

rabbit_use_ssl = False

rabbit_userid = guest

rabbit_password = guest

rabbit_virtual_host = /

rabbit_ha_queues = False

heartbeat_rate=2

heartbeat_timeout_threshold=0

[qos]

[root@ip-192-169-142-147 neutron(keystone_admin)]# cat l3_agent.ini | grep -v ^#|grep -v ^$

[DEFAULT]

debug = False

interface_driver =neutron.agent.linux.interface.OVSInterfaceDriver

handle_internal_only_routers = True

external_network_bridge = br-ex

metadata_port = 9697

send_arp_for_ha = 3

periodic_interval = 40

periodic_fuzzy_delay = 5

enable_metadata_proxy = True

router_delete_namespaces = False

# Set for Network Node

agent_mode = dvr_snat

[AGENT]

***********************************************************************

The following files are supposed to be replicated to all compute nodes

***********************************************************************

[root@ip-192-169-142-147 neutron(keystone_admin)]# cat metadata_agent.ini | grep -v ^#|grep -v ^$

[DEFAULT]

debug = False

auth_url = http://192.169.142.127:5000/v2.0

auth_region = RegionOne

auth_insecure = False

admin_tenant_name = services

admin_user = neutron

admin_password = 808e36e154bd4cee

nova_metadata_ip = 192.169.142.127

nova_metadata_port = 8775

nova_metadata_protocol = http

metadata_proxy_shared_secret =a965cd23ed2f4502

metadata_workers =4

metadata_backlog = 4096

cache_url = memory://?default_ttl=5

[AGENT]

[root@ip-192-169-142-147 ml2(keystone_admin)]# cat ml2_conf.ini | grep -v ^#|grep -v ^$

[ml2]

type_drivers = vxlan

tenant_network_types = vxlan

mechanism_drivers =openvswitch,l2population

path_mtu = 0

[ml2_type_flat]

[ml2_type_vlan]

[ml2_type_gre]

[ml2_type_vxlan]

vni_ranges =1001:2000

vxlan_group =239.1.1.2

[ml2_type_geneve]

[securitygroup]

enable_security_group = True

[agent]

l2_population=True

[root@ip-192-169-142-147 ml2(keystone_admin)]# cat openvswitch_agent.ini | grep -v ^#|grep -v ^$

[ovs]

integration_bridge = br-int

tunnel_bridge = br-tun

local_ip =10.0.0.147 <== update correspondingly on each node

bridge_mappings =physnet1:br-ex

enable_tunneling=True

[agent]

polling_interval = 2

tunnel_types =vxlan

vxlan_udp_port =4789

l2_population = True

arp_responder = True

prevent_arp_spoofing = True

enable_distributed_routing = True

drop_flows_on_start=False

[securitygroup]

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver
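Since openvswitch_agent.ini is copied to every node with only local_ip changed, it is easy to lose one of the options DVR depends on. The Python sketch below checks the three [agent] flags used in this setup (l2_population, arp_responder, enable_distributed_routing). It is an illustration, not an OpenStack tool: the function name missing_dvr_settings is hypothetical, and the sample text is a shortened copy of the file above.

```python
import configparser

# Shortened copy of openvswitch_agent.ini from the Network Node.
SAMPLE = """\
[ovs]
local_ip =10.0.0.147
bridge_mappings =physnet1:br-ex
[agent]
tunnel_types =vxlan
l2_population = True
arp_responder = True
enable_distributed_routing = True
"""

REQUIRED_FLAGS = ("l2_population", "arp_responder", "enable_distributed_routing")


def missing_dvr_settings(text):
    """Return the [agent] flags that are absent or not set to a true value."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    return [flag for flag in REQUIRED_FLAGS
            if not parser.getboolean("agent", flag, fallback=False)]


print(missing_dvr_settings(SAMPLE))
```

An empty list means all three flags are enabled in the text that was checked.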

******************************

On Compute Node

******************************

[root@ip-192-169-142-137 neutron]# cat neutron.conf | grep -v ^#|grep -v ^$

[DEFAULT]

verbose = True

debug = False

state_path = /var/lib/neutron

use_syslog = False

use_stderr = True

log_dir =/var/log/neutron

bind_host = 0.0.0.0

bind_port = 9696

core_plugin =neutron.plugins.ml2.plugin.Ml2Plugin

service_plugins =router

auth_strategy = keystone

base_mac = fa:16:3e:00:00:00

mac_generation_retries = 16

dhcp_lease_duration = 86400

dhcp_agent_notification = True

allow_bulk = True

allow_pagination = False

allow_sorting = False

allow_overlapping_ips = True

advertise_mtu = False

dhcp_agents_per_network = 1

use_ssl = False

rpc_response_timeout=60

rpc_backend=rabbit

control_exchange=neutron

lock_path=/var/lib/neutron/lock

[matchmaker_redis]

[matchmaker_ring]

[quotas]

[agent]

root_helper = sudo neutron-rootwrap /etc/neutron/rootwrap.conf

report_interval = 30

[keystone_authtoken]

auth_uri = http://127.0.0.1:35357/v2.0/

identity_uri = http://127.0.0.1:5000

admin_tenant_name = %SERVICE_TENANT_NAME%

admin_user = %SERVICE_USER%

admin_password = %SERVICE_PASSWORD%

[database]

[nova]

[oslo_concurrency]

[oslo_policy]

[oslo_messaging_amqp]

[oslo_messaging_qpid]

[oslo_messaging_rabbit]

kombu_reconnect_delay = 1.0

rabbit_host = 192.169.142.127

rabbit_port = 5672

rabbit_hosts = 192.169.142.127:5672

rabbit_use_ssl = False

rabbit_userid = guest

rabbit_password = guest

rabbit_virtual_host = /

rabbit_ha_queues = False

heartbeat_rate=2

heartbeat_timeout_threshold=0

[qos]

[root@ip-192-169-142-137 neutron]# cat l3_agent.ini | grep -v ^#|grep -v ^$

[DEFAULT]

interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver

# Set for Compute Node

agent_mode = dvr

[AGENT]

**********************************************************************************

On each Compute node neutron-l3-agent and neutron-metadata-agent are

supposed to be started.

**********************************************************************************

# yum install openstack-neutron-ml2

# systemctl start neutron-l3-agent

# systemctl start neutron-metadata-agent

# systemctl enable neutron-l3-agent

# systemctl enable neutron-metadata-agent

[root@ip-192-169-142-137 ml2]# cat ml2_conf.ini | grep -v ^#|grep -v ^$

[ml2]

type_drivers = vxlan

tenant_network_types = vxlan

mechanism_drivers =openvswitch,l2population

path_mtu = 0

[ml2_type_flat]

[ml2_type_vlan]

[ml2_type_gre]

[ml2_type_vxlan]

vni_ranges =1001:2000

vxlan_group =239.1.1.2

[ml2_type_geneve]

[securitygroup]

enable_security_group = True

[agent]

l2_population=True

[root@ip-192-169-142-137 ml2]# cat openvswitch_agent.ini | grep -v ^#|grep -v ^$

[ovs]

integration_bridge = br-int

tunnel_bridge = br-tun

local_ip =10.0.0.137

bridge_mappings =physnet1:br-ex

enable_tunneling=True

[agent]

polling_interval = 2

tunnel_types =vxlan

vxlan_udp_port =4789

l2_population = True

arp_responder = True

prevent_arp_spoofing = True

enable_distributed_routing = True

drop_flows_on_start=False

[securitygroup]

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

***********************

Compute Node

***********************

[root@ip-192-169-142-157 ~]# ip netns

fip-115edb73-ebe2-4e48-811f-4823fc19d9b6

qrouter-00223343-b771-4b7a-bbc1-10c5fe924a12

[root@ip-192-169-142-157 ~]# ip netns exec qrouter-00223343-b771-4b7a-bbc1-10c5fe924a12 ip a | grep "inet "

inet 127.0.0.1/8 scope host lo

inet 169.254.31.28/31 scope global rfp-00223343-b

inet 172.24.4.231/32 brd 172.24.4.231 scope global rfp-00223343-b

inet 172.24.4.233/32 brd 172.24.4.233 scope global rfp-00223343-b

inet 70.0.0.1/24 brd 70.0.0.255 scope global qr-bdd297b1-05

[root@ip-192-169-142-157 ~]# ip netns exec qrouter-00223343-b771-4b7a-bbc1-10c5fe924a12 iptables-save -t nat | grep "^-A"|grep l3-agent

-A PREROUTING -j neutron-l3-agent-PREROUTING

-A OUTPUT -j neutron-l3-agent-OUTPUT

-A POSTROUTING -j neutron-l3-agent-POSTROUTING

-A neutron-l3-agent-OUTPUT -d 172.24.4.231/32 -j DNAT --to-destination 70.0.0.15

-A neutron-l3-agent-OUTPUT -d 172.24.4.233/32 -j DNAT --to-destination 70.0.0.17

-A neutron-l3-agent-POSTROUTING ! -i rfp-00223343-b ! -o rfp-00223343-b -m conntrack ! --ctstate DNAT -j ACCEPT

-A neutron-l3-agent-PREROUTING -d 169.254.169.254/32 -i qr-+ -p tcp -m tcp --dport 80 -j REDIRECT --to-ports 9697

-A neutron-l3-agent-PREROUTING -d 172.24.4.231/32 -j DNAT --to-destination 70.0.0.15

-A neutron-l3-agent-PREROUTING -d 172.24.4.233/32 -j DNAT --to-destination 70.0.0.17

-A neutron-l3-agent-float-snat -s 70.0.0.15/32 -j SNAT --to-source 172.24.4.231

-A neutron-l3-agent-float-snat -s 70.0.0.17/32 -j SNAT --to-source 172.24.4.233

-A neutron-l3-agent-snat -j neutron-l3-agent-float-snat

-A neutron-postrouting-bottom -m comment --comment "Perform source NAT on outgoing traffic." -j neutron-l3-agent-snat
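The NAT rules above pair each floating IP with one fixed IP: DNAT in PREROUTING for inbound traffic and SNAT in the float-snat chain for outbound traffic. The Python sketch below extracts that mapping from iptables-save text and checks that both directions agree. It is illustrative only: the function name floating_ip_map is hypothetical, and the sample holds just the four relevant rules from the output above.

```python
import re

# The four floating-IP rules copied from the iptables-save output.
RULES = """\
-A neutron-l3-agent-PREROUTING -d 172.24.4.231/32 -j DNAT --to-destination 70.0.0.15
-A neutron-l3-agent-PREROUTING -d 172.24.4.233/32 -j DNAT --to-destination 70.0.0.17
-A neutron-l3-agent-float-snat -s 70.0.0.15/32 -j SNAT --to-source 172.24.4.231
-A neutron-l3-agent-float-snat -s 70.0.0.17/32 -j SNAT --to-source 172.24.4.233
"""

DNAT = re.compile(
    r"-A neutron-l3-agent-PREROUTING -d (\S+)/32 -j DNAT --to-destination (\S+)")
SNAT = re.compile(
    r"-A neutron-l3-agent-float-snat -s (\S+)/32 -j SNAT --to-source (\S+)")


def floating_ip_map(rules):
    """Return {floating_ip: fixed_ip}, verifying DNAT and SNAT rules match."""
    inbound = dict(DNAT.findall(rules))                       # floating -> fixed
    outbound = {fip: fixed for fixed, fip in SNAT.findall(rules)}
    if inbound != outbound:
        raise ValueError("DNAT and SNAT rules describe different mappings")
    return inbound


for fip, fixed in floating_ip_map(RULES).items():
    print(fip, "<->", fixed)
```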

[root@ip-192-169-142-157 ~]# ip netns exec fip-115edb73-ebe2-4e48-811f-4823fc19d9b6 ip a | grep "inet "

inet 127.0.0.1/8 scope host lo

inet 169.254.31.29/31 scope global fpr-00223343-b

inet 172.24.4.237/28 brd 172.24.4.239 scope global fg-d00d8427-25

[root@ip-192-169-142-157 ~]# ip netns exec qrouter-00223343-b771-4b7a-bbc1-10c5fe924a12 ip rule ls

0: from all lookup local

32766: from all lookup main

32767: from all lookup default

57480: from 70.0.0.17 lookup 16

57481: from 70.0.0.15 lookup 16

1174405121: from 70.0.0.1/24 lookup 1174405121

[root@ip-192-169-142-157 ~]# ip netns exec qrouter-00223343-b771-4b7a-bbc1-10c5fe924a12 ip route show table 16

default via 169.254.31.29 dev rfp-00223343-b

[root@ip-192-169-142-157 ~]# ip netns exec qrouter-00223343-b771-4b7a-bbc1-10c5fe924a12 ip route

70.0.0.0/24 dev qr-bdd297b1-05 proto kernel scope link src 70.0.0.1

169.254.31.28/31 dev rfp-00223343-b proto kernel scope link src 169.254.31.28

[root@ip-192-169-142-157 ~]# ip netns exec fip-115edb73-ebe2-4e48-811f-4823fc19d9b6 ip route

default via 172.24.4.225 dev fg-d00d8427-25

169.254.31.28/31 dev fpr-00223343-b proto kernel scope link src 169.254.31.29

172.24.4.224/28 dev fg-d00d8427-25 proto kernel scope link src 172.24.4.237

172.24.4.231 via 169.254.31.28 dev fpr-00223343-b

172.24.4.233 via 169.254.31.28 dev fpr-00223343-b

[root@ip-192-169-142-157 ~]# ip netns exec fip-115edb73-ebe2-4e48-811f-4823fc19d9b6 ifconfig

fg-d00d8427-25: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.24.4.237 netmask 255.255.255.240 broadcast 172.24.4.239

inet6 fe80::f816:3eff:fe10:3928 prefixlen 64 scopeid 0x20<link>

ether fa:16:3e:10:39:28 txqueuelen 0 (Ethernet)

RX packets 46 bytes 4382 (4.2 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 16 bytes 1116 (1.0 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

fpr-00223343-b: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 169.254.31.29 netmask 255.255.255.254 broadcast 0.0.0.0

inet6 fe80::d88d:7ff:fe1c:23a5 prefixlen 64 scopeid 0x20<link>

ether da:8d:07:1c:23:a5 txqueuelen 1000 (Ethernet)

RX packets 7 bytes 738 (738.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 7 bytes 738 (738.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 0 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@ip-192-169-142-157 ~]# ip netns exec qrouter-00223343-b771-4b7a-bbc1-10c5fe924a12 ifconfig

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 0 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

qr-bdd297b1-05: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 70.0.0.1 netmask 255.255.255.0 broadcast 70.0.0.255

inet6 fe80::f816:3eff:fedf:c80b prefixlen 64 scopeid 0x20<link>

ether fa:16:3e:df:c8:0b txqueuelen 0 (Ethernet)

RX packets 9 bytes 746 (746.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 10 bytes 864 (864.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

rfp-00223343-b: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 169.254.31.28 netmask 255.255.255.254 broadcast 0.0.0.0

inet6 fe80::5c77:1eff:fe6b:5a21 prefixlen 64 scopeid 0x20<link>

ether 5e:77:1e:6b:5a:21 txqueuelen 1000 (Ethernet)

RX packets 7 bytes 738 (738.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 7 bytes 738 (738.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

***********************

Network Node

***********************

[root@ip-192-169-142-147 ~(keystone_admin)]# ovs-vsctl show

738cdbf4-4dde-4887-a95e-cc994702138e

Bridge br-ex

Port br-ex

Interface br-ex

type: internal

Port phy-br-ex

Interface phy-br-ex

type: patch

options: {peer=int-br-ex}

Port "eth2"

Interface "eth2"

Port "qg-57d45794-46"

Interface "qg-57d45794-46"

type: internal

Bridge br-tun

fail_mode: secure

Port "vxlan-0a000089"

Interface "vxlan-0a000089"

type: vxlan

options: {df_default="true", in_key=flow, local_ip="10.0.0.147", out_key=flow, remote_ip="10.0.0.137"}

Port br-tun

Interface br-tun

type: internal

Port patch-int

Interface patch-int

type: patch

options: {peer=patch-tun}

Port "vxlan-0a00009d"

Interface "vxlan-0a00009d"

type: vxlan

options: {df_default="true", in_key=flow, local_ip="10.0.0.147", out_key=flow, remote_ip="10.0.0.157"}

Bridge br-int

fail_mode: secure

Port patch-tun

Interface patch-tun

type: patch

options: {peer=patch-int}

Port "qr-bdd297b1-05" <=========

tag: 1

Interface "qr-bdd297b1-05"

type: internal

Port "sg-67571326-46" <=========

tag: 1

Interface "sg-67571326-46"

type: internal

Port int-br-ex

Interface int-br-ex

type: patch

options: {peer=phy-br-ex}

Port br-int

Interface br-int

type: internal

Port "tap06dd3fa7-c0"

tag: 1

Interface "tap06dd3fa7-c0"

type: internal

ovs_version: "2.4.0"
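The VXLAN port names on br-tun encode the remote tunnel endpoint: the eight hex digits after "vxlan-" are the remote_ip shown in the port options. The Python sketch below decodes the two names from the output above. The function name vxlan_port_remote_ip is hypothetical, and the naming scheme is inferred from this output rather than taken from documentation.

```python
import ipaddress


def vxlan_port_remote_ip(port_name):
    """Decode the remote IPv4 address embedded in a 'vxlan-<hex>' port name."""
    prefix, _, hex_ip = port_name.partition("-")
    if prefix != "vxlan" or len(hex_ip) != 8:
        raise ValueError(f"unexpected port name: {port_name!r}")
    return str(ipaddress.IPv4Address(int(hex_ip, 16)))


for name in ("vxlan-0a000089", "vxlan-0a00009d"):
    print(name, "->", vxlan_port_remote_ip(name))
```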

***********************

SNAT forwarding

***********************

==== Compute Node ====

[root@ip-192-169-142-157 ~]# ip netns

fip-115edb73-ebe2-4e48-811f-4823fc19d9b6

qrouter-00223343-b771-4b7a-bbc1-10c5fe924a12

[root@ip-192-169-142-157 ~]# ip netns exec qrouter-00223343-b771-4b7a-bbc1-10c5fe924a12 ip rule ls

0: from all lookup local

32766: from all lookup main

32767: from all lookup default

57480: from 70.0.0.17 lookup 16

57481: from 70.0.0.15 lookup 16

1174405121: from 70.0.0.1/24 lookup 1174405121

[root@ip-192-169-142-157 ~]# ip netns exec qrouter-00223343-b771-4b7a-bbc1-10c5fe924a12 ip route show table all

default via 70.0.0.13 dev qr-bdd297b1-05 table 1174405121 <====

default via 169.254.31.29 dev rfp-00223343-b table 16

70.0.0.0/24 dev qr-bdd297b1-05 proto kernel scope link src 70.0.0.1

169.254.31.28/31 dev rfp-00223343-b proto kernel scope link src 169.254.31.28
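How these rules and tables choose between the floating IP path and the SNAT path can be traced with a simplified Python model of policy routing. It is only a sketch under stated assumptions: rules are tried in priority order, a rule applies when the source address matches its selector, and the first table holding a route to the destination wins. Local-table entries are left out, and the function name next_hop is hypothetical.

```python
import ipaddress

# (priority, source selector, table) from "ip rule ls" on the compute node.
RULES = [
    (0, "0.0.0.0/0", "local"),
    (32766, "0.0.0.0/0", "main"),
    (32767, "0.0.0.0/0", "default"),
    (57480, "70.0.0.17/32", "16"),
    (57481, "70.0.0.15/32", "16"),
    (1174405121, "70.0.0.0/24", "1174405121"),
]

# (destination prefix, next hop) from "ip route show table all".
TABLES = {
    "local": [],  # omitted in this simplified model
    "main": [("70.0.0.0/24", "direct on qr-bdd297b1-05"),
             ("169.254.31.28/31", "direct on rfp-00223343-b")],
    "default": [],
    "16": [("0.0.0.0/0", "169.254.31.29 via rfp-00223343-b")],
    "1174405121": [("0.0.0.0/0", "70.0.0.13 via qr-bdd297b1-05")],
}


def next_hop(src, dst):
    """Return the next hop chosen for a packet, or None if nothing matches."""
    src_ip, dst_ip = ipaddress.ip_address(src), ipaddress.ip_address(dst)
    for _priority, selector, table in sorted(RULES):
        if src_ip not in ipaddress.ip_network(selector):
            continue
        matches = [(ipaddress.ip_network(prefix), hop)
                   for prefix, hop in TABLES[table]
                   if dst_ip in ipaddress.ip_network(prefix)]
        if matches:
            # Longest prefix wins inside a table.
            return max(matches, key=lambda m: m[0].prefixlen)[1]
    return None


print(next_hop("70.0.0.15", "8.8.8.8"))  # instance with a floating IP
print(next_hop("70.0.0.20", "8.8.8.8"))  # hypothetical fixed-IP-only instance
```

In this model, the instance with a floating IP leaves through the rfp/fpr pair into the local fip namespace, while a fixed-IP-only source is sent to 70.0.0.13, the sg- port of the snat namespace on the Network Node.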

====Network Node ====

[root@ip-192-169-142-147 ~(keystone_admin)]# ip netns

snat-00223343-b771-4b7a-bbc1-10c5fe924a12

qrouter-00223343-b771-4b7a-bbc1-10c5fe924a12

qdhcp-3371ea3f-35f5-418c-8d07-82a2a54b5c1d

[root@ip-192-169-142-147 ~(keystone_admin)]# ip netns exec snat-00223343-b771-4b7a-bbc1-10c5fe924a12 ifconfig

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 0 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

qg-57d45794-46: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.24.4.236 netmask 255.255.255.240 broadcast 172.24.4.239

inet6 fe80::f816:3eff:fec7:1583 prefixlen 64 scopeid 0x20<link>

ether fa:16:3e:c7:15:83 txqueuelen 0 (Ethernet)

RX packets 49 bytes 4463 (4.3 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 13 bytes 1074 (1.0 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

sg-67571326-46: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 70.0.0.13 netmask 255.255.255.0 broadcast 70.0.0.255

inet6 fe80::f816:3eff:fed1:69b4 prefixlen 64 scopeid 0x20<link>

ether fa:16:3e:d1:69:b4 txqueuelen 0 (Ethernet)

RX packets 11 bytes 914 (914.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 14 bytes 1140 (1.1 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

*********************************************************************

SNAT sample: a VM with no FIP downloading data from the Internet.

`iftop -i eth2` snapshot on the Network Node.

*********************************************************************

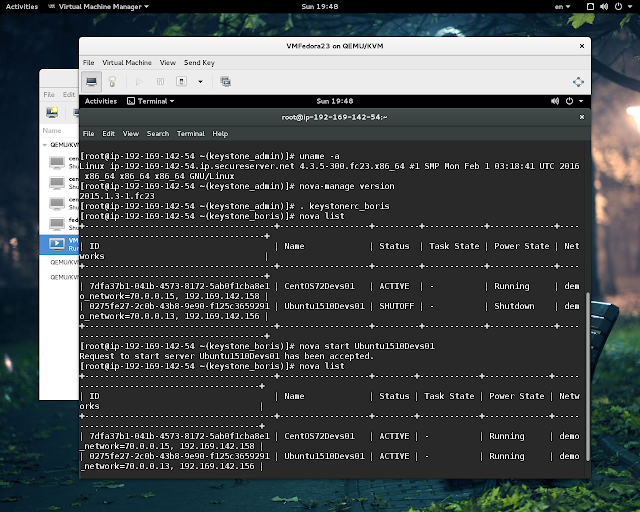

Download running on VM with FIP on 192.169.142.157

Download running on VM with FIP on 192.169.142.137

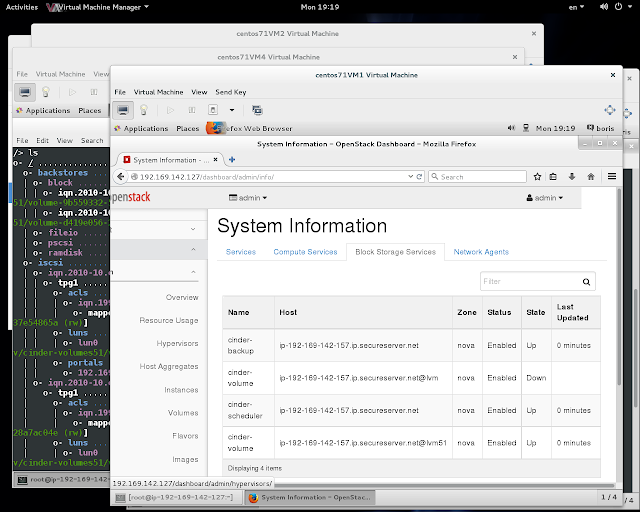

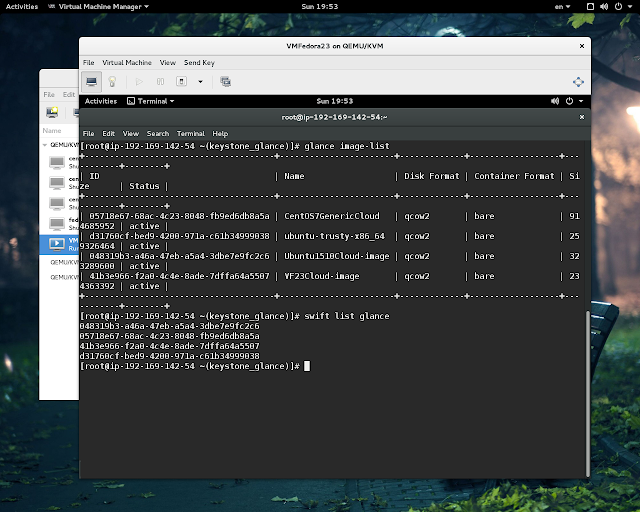
